AI is revolutionizing industries—enhancing customer service, automating financial decisions, and even assisting in medical diagnoses. Boardrooms worldwide are abuzz with AI strategies, and business leaders are racing to implement AI solutions to stay ahead of the competition.

However, amid this rapid adoption, a critical aspect is often overlooked: AI security.

While organizations fortify their firewalls and tighten access controls, a significant vulnerability remains largely unaddressed.

Enter the vector database.

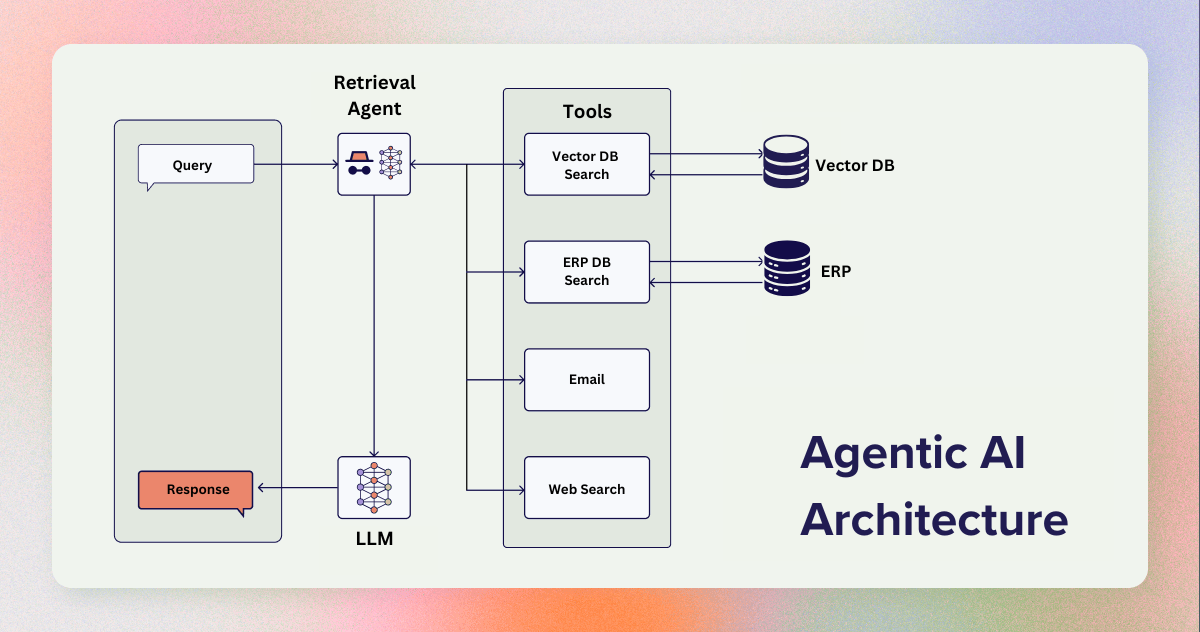

Gone are the days of simple, rule-based AI models. Today, we have Agentic AI—systems capable of autonomously retrieving, processing, and acting on data. These AI systems learn, adapt, and make decisions that can significantly impact business operations.

At the core of this intelligence lies the vector database.

Vector databases store high-dimensional representations of data—be it text, images, or audio—allowing AI models to retrieve information based on context and meaning, rather than mere keywords. This capability forms the backbone of retrieval-augmented generation (RAG), enabling AI to generate contextually relevant responses.
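To make the retrieval step concrete, here is a minimal Python sketch of similarity search feeding a RAG prompt. It assumes the embeddings have already been produced by some embedding model. The three-dimensional vectors and the `STORE`, `retrieve`, and `build_prompt` names are hypothetical, chosen only for illustration. Production vector databases typically use approximate nearest-neighbor indexes instead of the brute-force scan shown here.

```python
import math

# Hypothetical embeddings. Real embedding models emit hundreds or thousands
# of dimensions; these 3-dimensional values are made up for illustration.
STORE = [
    ("Reset your online banking password", [0.9, 0.1, 0.0]),
    ("Dispute a card transaction", [0.1, 0.9, 0.1]),
    ("Branch opening hours", [0.0, 0.1, 0.9]),
]


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def retrieve(query_vec, k=2):
    """Return the k stored texts whose embeddings are most similar to the query."""
    ranked = sorted(STORE, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question, query_vec, k=2):
    """RAG step: prepend the retrieved passages to the user's question."""
    context = "\n".join(f"- {t}" for t in retrieve(query_vec, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Hypothetical embedding of the question "I forgot my login".
    print(retrieve([0.8, 0.2, 0.1], k=1))  # ['Reset your online banking password']
```

Note that `retrieve()` ranks every stored vector regardless of who is asking: whatever sits in the store can end up in the prompt.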

However, there's a pressing issue: vector databases often lack robust security measures.

Traditional databases have well-established security frameworks—encryption, access control, audit logs, and compliance tools.

But vector databases? They’re a different beast.

Unlike structured relational databases, vector databases deal with fuzzy, high-dimensional embeddings that are harder to protect. Standard security measures don't translate directly to them because:

The result? A perfect storm for attackers.

If an AI system can access sensitive data, so can a determined attacker. The attack surface of vector databases is broad, and malicious actors are exploiting these vulnerabilities:

These threats are not hypothetical; they are occurring in real time.

Consider a financial institution that runs an AI-powered customer service system. The system uses a vector database to store and retrieve customer interaction data, enabling personalized responses and efficient service.

However, because the vector database is inadequately secured, it becomes an entry point for attackers, who exploit its weaknesses to reach the embeddings stored inside.

The breach leads to:

This hypothetical scenario underscores the critical need for robust security measures in vector databases to prevent such breaches.

The AI revolution brings unprecedented opportunities, but it also introduces new security challenges. Organizations must adopt a proactive approach to AI security, integrating robust measures from the ground up.

Vector databases, while integral to advanced AI functionality, are a potential weak link if left unsecured. Without building security into the AI infrastructure from the outset, organizations risk significant data breaches and the consequences that follow.
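One way to start building security in is to enforce access control and auditing at the retrieval layer itself. The Python sketch below assumes each stored vector is tagged with the tenant that owns it; `Record`, `SecureStore`, and the tenant-matching rule are hypothetical illustrations, not any particular product's API. It covers only access filtering and an in-memory audit trail. Encryption at rest and in transit, authentication, and network controls are outside its scope.

```python
import math
from dataclasses import dataclass, field
from datetime import datetime, timezone


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


@dataclass
class Record:
    text: str
    vector: list[float]
    tenant: str  # hypothetical owner tag, e.g. a customer or business unit


@dataclass
class SecureStore:
    records: list[Record] = field(default_factory=list)
    audit_log: list[dict] = field(default_factory=list)

    def add(self, record: Record) -> None:
        self.records.append(record)

    def query(self, caller_tenant: str, query_vec: list[float], k: int = 3) -> list[str]:
        # Access control: only the caller's own records become candidates.
        # Filtering happens before ranking, so other tenants' data is never scored.
        candidates = [r for r in self.records if r.tenant == caller_tenant]
        ranked = sorted(candidates, key=lambda r: cosine(query_vec, r.vector), reverse=True)
        results = [r.text for r in ranked[:k]]
        # Audit trail: who asked, when, and how many results they received.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "tenant": caller_tenant,
            "returned": len(results),
        })
        return results


if __name__ == "__main__":
    store = SecureStore()
    store.add(Record("Acme Q3 forecast", [1.0, 0.0], tenant="acme"))
    store.add(Record("Globex payroll", [1.0, 0.0], tenant="globex"))
    print(store.query("acme", [1.0, 0.0]))     # ['Acme Q3 forecast']
    print(store.query("initech", [1.0, 0.0]))  # []
```

Filtering before ranking means a record belonging to another tenant never enters the candidate list, so the guarantee does not depend on a later step stripping it out. Many vector databases expose metadata filters on queries that can play a similar role; the exact mechanism varies by product.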

The question is no longer if AI security will become a boardroom priority.

It’s when.

💡 What’s your take? Are businesses doing enough to secure AI systems? Let’s discuss.