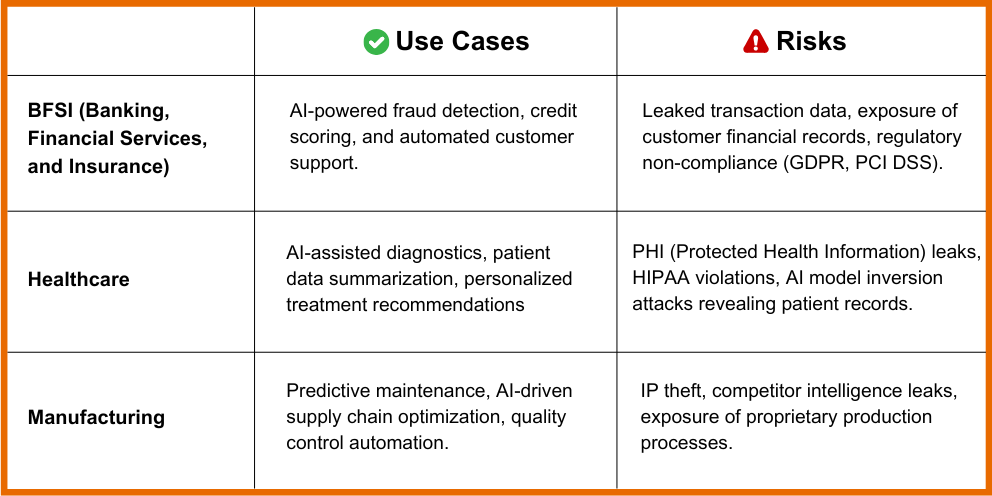

AI is no longer a futuristic concept—it's a business necessity. Organizations across industries are investing in AI to drive efficiency, enhance customer experiences, and unlock new revenue streams. Yet, despite the enthusiasm, many AI projects never make it past the pilot stage.

Why?

It's not just about budgets or technical complexity. CISOs and risk teams often become the biggest roadblocks — and for good reason.

While AI promises business transformation, it also introduces a security blind spot that traditional security frameworks weren't designed to handle.

Until that gap is addressed, AI projects will continue to stall before they can deliver value.

A leading multinational bank was ready to deploy an AI-powered customer engagement assistant. The AI would analyze customer conversations, detect sentiment, and provide personalized financial advice—potentially improving customer retention and increasing cross-selling opportunities.

The business team was all in—the project had a clear ROI, regulatory approvals, and a well-defined roadmap.

Then the CISO stepped in with hard questions about how the AI would be secured.

The answers weren't convincing. The bank's traditional security framework—firewalls, IAM, and encryption policies—didn't cover GenAI's risks.

The project was paused indefinitely.

It wasn't an isolated case. Many organizations face the same challenge: AI projects stall not because they lack value, but because they lack security clarity.

For AI to succeed, security cannot be a roadblock—it has to be an enabler.

The good news? A mindset shift is already happening.

Security leaders are moving from saying "No, this is too risky" to "Yes, but only if AI is secured by design." This shift is critical because the cost of neglecting AI security is massive.

AI security isn't just about reducing risk—it's about unlocking AI's full potential. A security-first AI strategy means faster approvals, smoother deployments, and fewer operational disruptions.

Without security, AI investments risk becoming liabilities instead of assets. AI security isn't a bottleneck—it's the key to accelerating AI adoption.

For years, businesses have approached security as a compliance exercise—"What's the minimum requirement we need to check off?"

That mindset doesn't work with AI.

AI security isn't just about avoiding fines; it's about long-term success. Companies that prioritize security from the start gain a lasting advantage over those that bolt it on later.

Many still view AI security as a regulatory headache rather than a strategic advantage. That's a mistake.

Businesses that build AI security into their infrastructure from day one will outlast those that treat it as an afterthought. The future belongs to those who secure AI—not just for compliance, but for resilience, trust, and competitive edge.

One of the biggest reasons AI security is neglected? The fear that it will slow AI down.

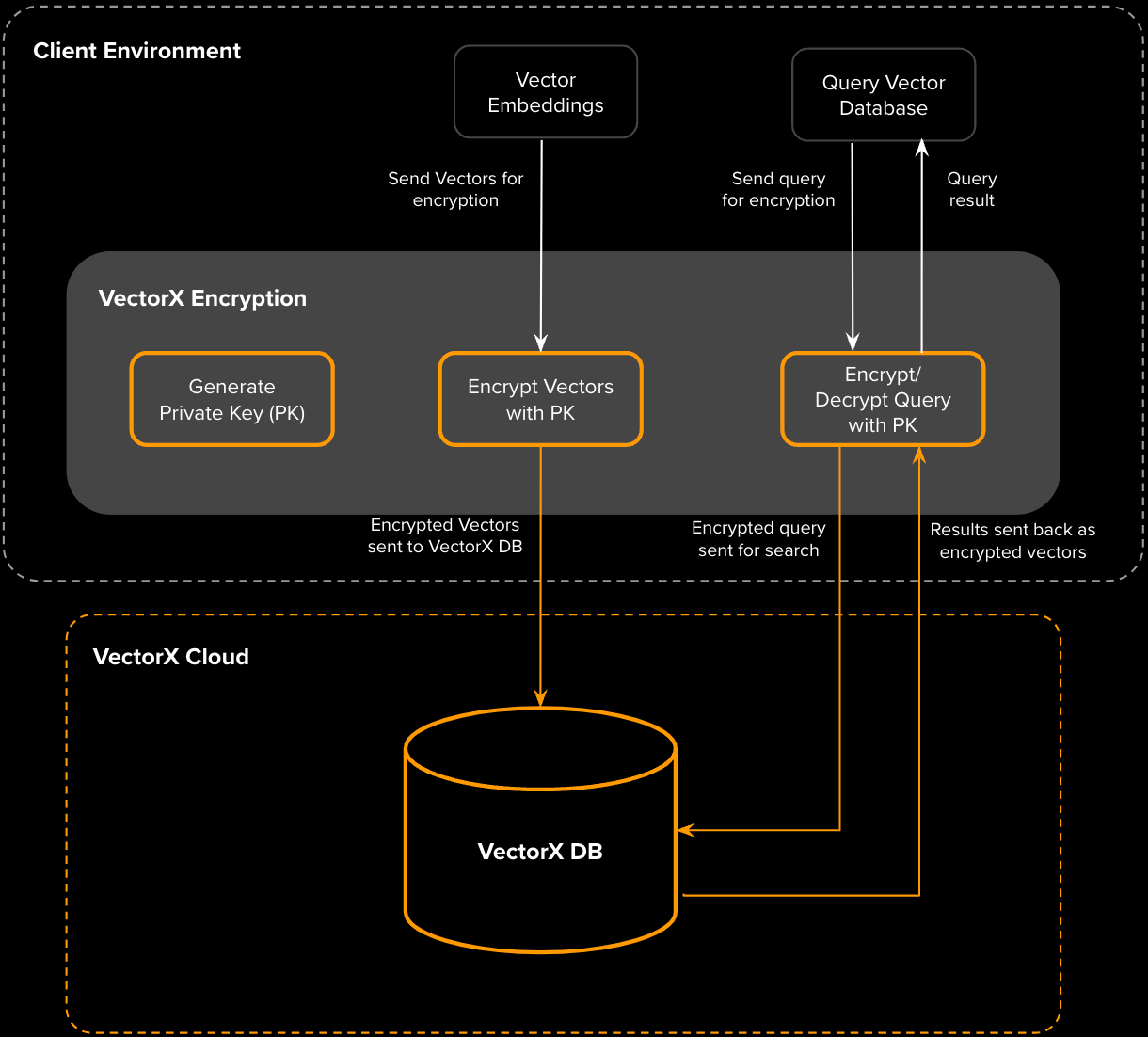

Encryption has traditionally been a double-edged sword: it protects data but slows down operations. Developers often disable encryption to keep AI applications fast and responsive. In GenAI applications, however, vector databases store embeddings derived from sensitive documents and conversations, so leaving them unprotected makes them the weakest link in the security chain.

That's where VectorX changes the game.

VectorX is built for businesses that demand both top-tier AI performance and uncompromising security—without trade-offs.

With VectorX, AI teams no longer have to choose between security and speed. Now, they get both.

AI security isn't an IT problem—it's a business necessity.

Companies that treat AI security as an afterthought will eventually face the consequences, whether in the form of data breaches, compliance violations, or lost customer trust. But those who proactively build security into their AI strategy will lead the market.

The average cost of a data breach? About $4.5 million, according to IBM's 2023 Cost of a Data Breach report.

The cost of securing your AI stack before disaster strikes? A fraction of that.

VectorX isn't just a product; it's a wake-up call. If your AI security strategy depends on 'hoping for the best,' then it's only a matter of time before you're the next cautionary tale.

The bottom line? Secure AI isn't just about avoiding risks—it's about ensuring long-term success.

Don't wait for the breach.

🔐 Are you building AI applications? What security challenges are you facing?

If you're a CISO, what would make you feel confident about approving an AI project?

Join the conversation!